AI Glasses: The Dawn and Dilemma of the Next Mobile Terminal

In 2025, Xiaomi AI Glasses entered the consumer market at 1,999 yuan and sold more than 70,000 units in their first week, yet faced a return rate as high as 40%. Meanwhile, Meta Ray-Ban Display sold out within 48 hours of its U.S. launch, and daigou (cross-border purchasing agent) listings on Xianyu marked its price up by nearly 100%. Industry frenzy and a reality check around AI glasses are unfolding at the same time. From feature phones to smartphones, each generation of mobile terminals has grown out of a revolution in interaction and a reconstruction of usage scenarios. AI glasses now stand at a similar historical juncture: they carry the expectation of becoming the “gateway to the spatial computing era” while facing challenges on both the technology and market fronts. (Meta Reports Fourth Quarter and Full Year 2024 Results)

Technological Breakthroughs: From Concept Devices to Consumer-Grade Products

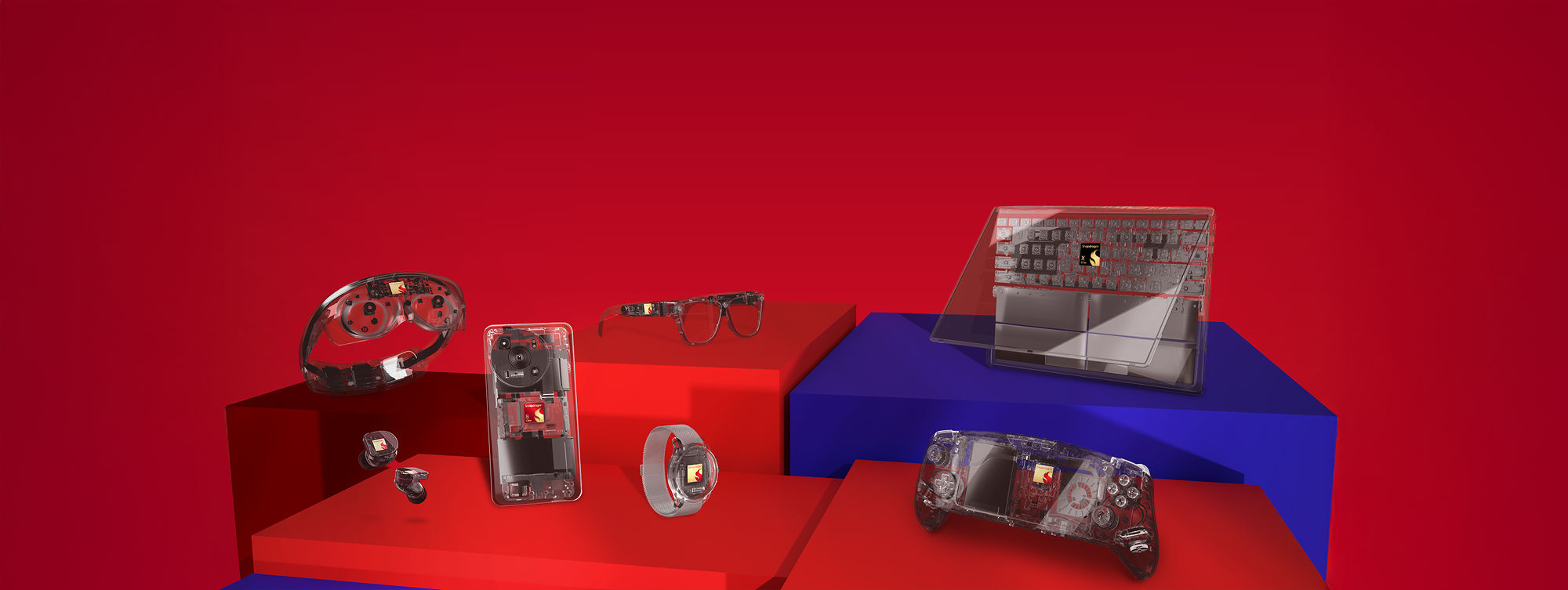

The rise of AI glasses rests first on steady advances in underlying technology, which form the foundation of their claim to be the next-generation terminal. On the chip side, Qualcomm’s first-generation Snapdragon AR1+ platform is 20% smaller and can run small language models with 1 billion parameters on-device. Gains like these let products such as Rokid Glasses cut weight while extending battery life by 30%.

In display and interaction technology, innovations have made “seamless wearing” a reality. Meta Ray-Ban Display uses a non-intrusive HUD (head-up display) that places a hidden color display area at the lower edge of the right lens. The display does not obstruct the user’s field of view, yet it can present key information such as navigation and notifications in real time. At just 69 grams, the glasses leave behind the bulk of early AR devices.

Market Signals: Growth on the Eve of Explosion